ACLU’s Gillmor on privacy: ‘We pay for what we value’ (Q&A)

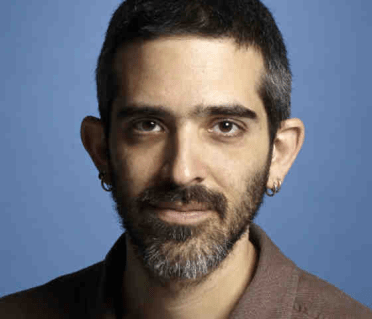

SAN FRANCISCO—Can something as mundane as modern Web hosting be used to increase consumer privacy? Daniel Kahn Gillmor, a senior staff technologist at the ACLU’s Project on Speech, Privacy, and Technology, thinks so. He also believes that the future of consumer privacy depends on technology providers taking bolder steps to protect their users.

At a recent conference held here by the content delivery network company Fastly, Gillmor spent 20 minutes explaining a set of technology proposals that a modern Web host like Fastly can undertake to defend privacy—without burying itself in costly changes.

“The adversaries who are doing network monitoring tend to focus on metadata, not on content,” he told the crowd of engineers, referring to the tracking data generated whenever we write emails, watch cat videos online, or text emojis. The importance of metadata to surveillance was underscored by former National Security Agency Director Michael Hayden in 2014, when he declared, “We kill people based on metadata.”

Gillmor explained how a content delivery network, or CDN, could combine new Internet traffic analysis countermeasures and Domain Name System obfuscation to help prevent spies from snooping on consumers’ Internet activities. Gillmor’s talk was more of a pitch about what a CDN can do than what Fastly is actually doing.

After Gillmor’s presentation, he and I spoke at length about three of today’s biggest challenges to consumer privacy: rising costs, the responsibilities of private companies to their users, and the struggle to make email safer and more private.

What follows is an edited transcript of our conversation.

Q: There seems to be a growing digital divide over privacy technology. What’s your perspective?

My biggest fear is that we’re going to accept, as a society, that privacy is a luxury. You see that already, in many situations. Someone who can afford a home has more privacy than someone who can’t afford a home. This is not just a digital-divide thing; it’s a general situation where people buy privacy for themselves. It’s unjust.

Some of the services people buy are intended to keep them off others’ radar. (And some of those are actually invasive.) And a lot of people don’t even actively consider privacy when making purchasing decisions. So there’s not enough of a market, in some sense, for privacy-preserving technologies.

Q: Which ostensibly privacy-preserving technologies are people buying that might actually be compromising them? Virtual private networks?

If you can’t afford a VPN, most of your connections are going out in the clear, which means that your network provider has an opportunity to surveil you and build profiles about you.

But if everyone got a VPN, all network traffic would be concentrated at a few VPN companies instead of spread across the various Internet service providers. Then you could monitor everybody’s traffic just by monitoring the VPNs, instead of all the different on-ramps.

And if you had a big budget and wanted to do a lot of monitoring, you could even set up your own VPN and sell access. Brand and market it, and then maybe I’m paying you to harvest my data.

Another consideration: What privacy controls do we have on the VPN services we might buy? They should be subject to the same constraints that we would like to put on ISPs, because they are in a position to see everything we do online. That’s a different perspective from treating a VPN as just another network service that you may or may not decide to use.

Q: Tor is the exception to this rule because it’s free and designed to reduce tracking, right?

There’s a bunch of mythology around Tor. But if you want to play around with it, it’s really not that hard. You go to TorProject.org, download the browser, and use it to browse the Web.

It’s a little bit slower than what people usually expect from a Web browser. But Tor developers have really thought carefully, not just about how to route network traffic, but also about what browsers do and how they pass traffic. Tor really does provide a significant amount of user privacy.

In dealing with cookies, for example, it uses “double-keyed cookies.” The typical browser makes a request, the origin sends back the page, and the page refers to several subresources such as images or video. The browser then fetches those subresources, which might come from a third party such as an ad server, and sends cookies [a small piece of computer data that can track behavior on the Web] along with each request.

So if I visit a site that makes a request to a third-party server, then visit another site that uses the same third-party server, that third party can identify me as the same person because of the identical cookies I send.

The Tor browser ensures that the cookies you send to the same third party from different sites don’t match [because each cookie is keyed to the site you’re visiting as well as to the domain that set it]. I think it would be better to just not send cookies at all, but the Web has evolved such that some things, like authentication schemes, don’t work if you don’t send any cookies to a third party. This is something Tor does in its browser, independent of its network traffic obfuscation.
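To make the double-keying idea concrete, here is a toy model in Python. It is a sketch under simplifying assumptions, not Tor Browser’s or Firefox’s actual implementation; the class `ToyCookieJar`, its `cookie_for` method, and the example domains are all hypothetical. The only difference between the two modes is what the cookie store is keyed on.

```python
class ToyCookieJar:
    """Toy model of a browser cookie jar (not any browser's real code).

    Classic mode keys cookies only by the domain that set them, so a third
    party embedded on many sites sees one cookie everywhere. Double-keyed mode
    keys them by (top-level site, cookie domain), so the same third party gets
    a different cookie on every site.
    """

    def __init__(self, double_keyed: bool) -> None:
        self.double_keyed = double_keyed
        self._store: dict = {}
        self._next_id = 0

    def _key(self, top_level_site: str, cookie_domain: str) -> tuple:
        # The classic jar ignores which site you are actually visiting.
        first_key = top_level_site if self.double_keyed else "*"
        return (first_key, cookie_domain)

    def cookie_for(self, top_level_site: str, cookie_domain: str) -> str:
        """Cookie sent to cookie_domain while visiting top_level_site.

        The first request mints a fresh ID, as a tracker setting a cookie would.
        """
        key = self._key(top_level_site, cookie_domain)
        if key not in self._store:
            self._next_id += 1
            self._store[key] = f"id-{self._next_id}"
        return self._store[key]


for double_keyed in (False, True):
    jar = ToyCookieJar(double_keyed)
    on_news = jar.cookie_for("news.example", "ads.example")
    on_shop = jar.cookie_for("shop.example", "ads.example")
    # Can ads.example link the two visits? Classic: True; double-keyed: False.
    print(f"double_keyed={double_keyed}: linkable={on_news == on_shop}")
```

In the classic jar, `ads.example` receives the same ID on both sites and can link the visits; in the double-keyed jar it receives two unrelated IDs. Cookies still reach the third party in both modes, which is why schemes that depend on them keep working within a single site.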

If you’re interested in getting the most developed set of privacy-preserving tools, ones that have been carefully thought about, researched, and well implemented, Tor is the place to get it. As part of the “Tor uplift” effort to bring features from the Tor browser back into Firefox, Mozilla has added double-keyed cookies to Firefox as an opt-in feature. This is a good example of how collaboration between noncompany technology providers can add functionality for a wide swath of users.

For instant messaging, people should be using Signal. And if they’re not using Signal, they should use WhatsApp.

Q: What about for email?

I’m involved with an effort called Autocrypt that tries to do something similar for email. We have had email encryption technology available to us for 20 years, but encrypting email is painful.

Q: So painful that the creator of email encryption tells people to stop using email to send sensitive data.

Phil Zimmermann doesn’t use it anymore. He says people should stop using it, but the fact is, that won’t happen. And he knows that.

We have a responsibility as engineers to try to fix the systems people actually use. It’s one thing for us to say, “Quit it.” And it’s another thing to say, “OK, we get it. You need email because email works in all these different ways.”

I think we have a responsibility to try to clean up some of our messes, instead of saying, “Well, that was a mistake. All of you idiots who are still doing what we told you was so cool two years ago need to stop doing it.”

We need to actually support it. This is a problem that I call the curse of the deployed base. I take it seriously.

The Autocrypt project is run by a group of email developers who are building a consensus around automated methods to give people some level of encrypted email without getting in their way.

Some of us deeply, intimately know the thousand paper cuts that come with trying to get encrypted email set up. We asked, “What’s the right way to get around that for the majority of people?” And the answer we’ve come up with isn’t quite as good as traditional encrypted email, from a security perspective. But it isn’t bad.

When someone asks me how to use email encryption, I’d like one day to be able to tell them to use an Autocrypt-capable mail client, then turn on the Autocrypt feature.

From a solutions perspective, we don’t necessarily handle everything correctly. But no one does traditional encrypted email properly. And encrypted email is a two-way street. If you want people to be able to do it, the people with whom you correspond need to also be doing it.

I expect to get a lot of shit, frankly, from some other members of the encrypted-email community. Five years ago, I would have said Autocrypt sounds dangerous because it’s not as strong as we expect. That is, I might have been inclined to give people shit about a project like Autocrypt. However, I think that imperfect email encryption with a focus on usability will be better protection than what we currently have, which is effectively cleartext for everyone, because no one can be bothered to use difficult email encryption.
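For readers curious how Autocrypt avoids manual setup: the sender’s mail client attaches its public key to ordinary outgoing mail in an `Autocrypt:` header (roughly `addr=...; prefer-encrypt=mutual; keydata=...`), and the recipient’s client quietly records it. Both sides of the two-way street learn each other’s keys just by corresponding. The Python sketch below parses such a header. It is a simplified reading of the Autocrypt Level 1 rules, not a conforming implementation. It skips peer-state tracking, timestamps, and the logic that decides when to encrypt, and the function name and return shape are hypothetical.

```python
import base64
from typing import Optional

# Attribute names defined by Autocrypt Level 1; others must start with "_".
KNOWN_ATTRIBUTES = {"addr", "prefer-encrypt", "keydata"}


def parse_autocrypt_header(value: str, from_addr: str) -> Optional[dict]:
    """Simplified parse of an Autocrypt header value.

    Returns None whenever the header should be ignored.
    """
    attrs = {}
    for part in value.split(";"):
        part = part.strip()
        if not part:
            continue
        # Split on the first "=" only; base64 key data may end in "=" padding.
        name, sep, val = part.partition("=")
        if not sep:
            return None
        name = name.strip()
        if name not in KNOWN_ATTRIBUTES and not name.startswith("_"):
            return None  # unknown "critical" attribute: ignore the whole header
        attrs[name] = val.strip()

    if "addr" not in attrs or "keydata" not in attrs:
        return None
    # The header must describe the message's sender (case-insensitive here).
    if attrs["addr"].lower() != from_addr.lower():
        return None
    try:
        # Key data may be folded across lines, so drop all whitespace first.
        key = base64.b64decode("".join(attrs["keydata"].split()), validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return None
    return {
        "addr": attrs["addr"],
        "prefer_encrypt": attrs.get("prefer-encrypt") == "mutual",
        "keydata": key,  # in a real header: raw OpenPGP public key bytes
    }
```

A header whose `addr` doesn’t match the sender, or that carries an unrecognized attribute without the `_` prefix, is simply ignored rather than trusted. This kind of conservative default is what lets the scheme run without asking the user anything.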

Q: How important is it for consumers to understand who’s targeting them?

This is the other thing that I feel like we don’t have enough of a developed conversation around. I’m a well-off white guy, working for a powerful nonprofit in the United States. We’re not as powerful as we’d like to be, and we obviously don’t win as many of the fights that we would like to win. But I don’t feel that I’m personally, necessarily, a target.

Other people I talk to might be more targeted. As a Debian [Linux] developer, I am responsible for pieces of infrastructure that other people rely on. They might be targeted, and I could be targeted because they’re being targeted.

When we talk about threats, we take an individualistic approach when, in fact, we have a set of interdependencies. You and I exchange emails, and all of a sudden, someone who wants access to your emails can go attack my email.

It used to be that I would set up a server, and you would connect to it to view my site. There were network intermediaries, but no CDN. Now there are both, and the CDN’s privacy is my privacy is your privacy. All of these things are intermixed.

You have to think about the interdependencies that you have, as well as the threat model of the people who depend on you. Then there’s responsible data stewardship, which I don’t think people think about actively.

My hope is that every organization that holds someone else’s data will see that data as a liability to be cared for, as well as an asset. Most people today see other people’s data as an asset because it will be useful at some point. Companies raise venture capital on the basis of their user base, and on the assumption that they can monetize that user base somehow. Most of the time, that means sharing data.

We haven’t yet seen a sufficient shift to companies treating user data as a responsibility, instead of just as a future pot of money. How do we ensure that organizations in this middleman position take that responsibility seriously? We can try to hold them publicly accountable. We can say, “Look, we understand you have access to this data, and we want you to be transparent about whom you leak it to. Or give it to.”

I’ve been happy to see large companies make a standard operating procedure of documenting all the times they’ve had data requested by government agencies, but I don’t think it’s adequate. It doesn’t cover who they’ve actually sent data to in commercial relationships.

Q: A big challenge in protecting consumers from hacking and spying is encrypting metadata. Where does that effort stand today?

It’s complicated by a lot of factors.

First, what looks like content to some layers of the communications stack might look like metadata to other layers. For example, in an email, there is a header that says “To,” and a header that says “From.” From one perspective, the entire email is content. From another, the “To” and the “From” are metadata. Some things are obviously content, and some things are obviously metadata, but there’s a vast gray area in the middle.

When you’re talking about metadata versus content, it helps to understand that the network operates on all these different levels, so the idea of simply encrypting metadata doesn’t fully fit the bill.
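Here is a small Python illustration of the layering point. It uses the standard library’s `email` parser on a hypothetical message whose body has already been end-to-end encrypted; the addresses, the placeholder ciphertext, and the helper `split_layers` are made up for the example. To the transport underneath, the whole message is one opaque payload. To the mail system, the headers are metadata that stay readable.

```python
from email import message_from_string

# Hypothetical message whose body has already been end-to-end encrypted.
RAW = (
    "From: alice@example.org\n"
    "To: bob@example.net\n"
    "Subject: meeting\n"
    "\n"
    "<ciphertext only Bob's private key can open>\n"
)


def split_layers(raw: str) -> tuple:
    """Return (headers, body) as a mail server would see them."""
    msg = message_from_string(raw)
    headers = dict(msg.items())
    return headers, msg.get_payload()


# Layer 1: to TLS/TCP, the entire message is just a payload of N bytes.
print(f"transport sees an opaque payload of {len(RAW.encode())} bytes")

# Layer 2: to the mail system, the headers are metadata and stay readable,
# even though the body is ciphertext. (In traditional OpenPGP email the
# Subject line is not encrypted either.)
headers, body = split_layers(RAW)
print(headers["From"], "->", headers["To"], "| subject:", headers["Subject"])
```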

Take the size and timing of packets, for example. Say you send me K bytes. You can’t encrypt that number, but you can obfuscate it.

Take profile pictures. If you’re serving up a cache of relatively static data like avatars, you can serve every avatar at the same size.
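Here is a minimal sketch of the avatar idea in Python, assuming a hypothetical 16 KiB target size and a helper named `pad_avatar`. Appending filler bytes works only if the client ignores them. In practice, the padding might instead be applied at the transport layer, for example with TLS 1.3 record padding or HTTP/2 frame padding.

```python
# Hypothetical target: every avatar is served at exactly 16 KiB.
AVATAR_SIZE = 16 * 1024


def pad_avatar(image: bytes, size: int = AVATAR_SIZE) -> bytes:
    """Pad an avatar with zero bytes so every response has the same length."""
    if len(image) > size:
        # Padding can hide sizes only up to the target; larger images leak.
        raise ValueError("avatar exceeds the padding target; resize it first")
    return image + b"\x00" * (size - len(image))


small = b"\x89PNG" + b"a" * 2_000   # stand-ins for two real avatar files
large = b"\x89PNG" + b"b" * 9_000
# An observer counting bytes on the wire can no longer tell them apart.
print(len(pad_avatar(small)) == len(pad_avatar(large)) == AVATAR_SIZE)  # True
```

The price is the filler sent with every small avatar, paid in throughput.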

Q: Can you essentially hide other forms of metadata that can’t be encrypted?

You can’t obfuscate an Internet Protocol address.

When I send you traffic over IP, the metadata at the IP layer is the source and destination addresses. If you encrypted the destination address, the traffic wouldn’t reach the destination. So somebody has to see some of the metadata somewhere. And practically, realistically, I have no hope of encrypting, or protecting, the destination address. But maybe I don’t need to present the source address.
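To see why the addresses resist encryption, here is a Python sketch that builds and reads a minimal IPv4 header with the standard `struct` and `ipaddress` modules. The field layout is standard IPv4. The helper names and the documentation-range addresses are illustrative, and the checksum is left at zero for brevity. Whatever happens to the payload, any router on the path can read these bytes, and it must read the destination to forward the packet.

```python
import ipaddress
import struct


def build_ipv4_header(src: str, dst: str, payload_len: int) -> bytes:
    """Build a minimal 20-byte IPv4 header (checksum left at 0 for brevity)."""
    version_ihl = (4 << 4) | 5          # IPv4, header length 5 x 32-bit words
    total_length = 20 + payload_len
    return struct.pack(
        "!BBHHHBBH4s4s",
        version_ihl, 0, total_length,   # version/IHL, DSCP/ECN, total length
        0, 0,                           # identification, flags/fragment offset
        64, 6, 0,                       # TTL, protocol (6 = TCP), checksum
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )


def visible_metadata(header: bytes) -> dict:
    """What any router on the path can read, however the payload is encrypted."""
    (total_length,) = struct.unpack("!H", header[2:4])
    return {
        "src": str(ipaddress.IPv4Address(header[12:16])),
        "dst": str(ipaddress.IPv4Address(header[16:20])),
        "total_length": total_length,   # the size leaks here as well
    }


hdr = build_ipv4_header("192.0.2.1", "198.51.100.7", payload_len=100)
print(visible_metadata(hdr))
```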

Q: Whether you’re padding existing traffic to hide the size of the information transferred, or making changes to how domain name servers operate, what are the associated costs? Additional traffic isn’t free, right?

It’s hard to measure some of the costs. But you’d measure the cost of padding to defend against traffic analysis in terms of throughput.

Imagine that your DNS was already encrypted. We know how to do it; we have the specification for it. Are we talking about an extra 5 percent of traffic? Or are we talking about an extra 200 percent or 2,000 percent of traffic? And if we’re talking about DNS, what’s the proportion of that traffic relative to the proportion of all of the other traffic?

DNS traffic is peanuts compared to one streamed episode of House of Cards.
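To put rough numbers on the 5 percent versus 2,000 percent question, the Python sketch below computes the cost of block-length padding, where each message is rounded up to a multiple of a fixed block. The 128-octet query and 468-octet response blocks are the ones recommended for DNS in RFC 8467. The message sizes, the message count, and the 1 GB video are hypothetical placeholders, not measurements. The sketch also ignores the few bytes the EDNS(0) padding option itself adds.

```python
# Block sizes from RFC 8467's block-length padding policy for DNS.
QUERY_BLOCK = 128      # octets
RESPONSE_BLOCK = 468   # octets


def padded_length(n: int, block: int) -> int:
    """Round a message of n bytes up to the next multiple of `block`."""
    return -(-n // block) * block


def overhead(n: int, block: int) -> float:
    """Padding bytes added, as a fraction of the unpadded size."""
    return (padded_length(n, block) - n) / n


def worst_case_extra(messages: int, block: int) -> int:
    """Upper bound on padding bytes: each message gains at most block - 1."""
    return messages * (block - 1)


# Per-message overhead looks alarming for tiny DNS messages...
print(f"{overhead(45, QUERY_BLOCK):.0%}")       # 184% for a 45-byte query
print(f"{overhead(300, RESPONSE_BLOCK):.0%}")   # 56% for a 300-byte response

# ...but relative to everything else on the wire it is tiny. Hypothetical
# session: 1,000 padded responses alongside 1 GB of streamed video.
extra = worst_case_extra(1_000, RESPONSE_BLOCK)
print(f"{extra / 1_000_000_000:.3%}")            # 0.047% of the video's bytes
```

Per message, padding can nearly triple a small query. As a share of all traffic, it rounds to almost nothing, which is Gillmor’s point.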

Some traffic analysis savant will come along and say, “We found a way to attack your padding scheme,” which is great. That’s how the science advances. But it might cost your adversary two to three times more to decipher, because of the padding.

If we step back from that, let’s ask about other costs. Have you looked at the statistics for network traffic with an ad blocker versus no ad blocker?

Your browser downloads significantly less data if it doesn’t pull ads. And yet, as a society, we seem to have decided that the default should be to pull a bunch of ads. We’ve decided that the traffic cost of advertising, which is more likely to be privacy-invasive, is worth paying.

So yes, metadata padding will cost something. I’m not going to pretend that it doesn’t, but we pay for what we value.

And if we don’t value privacy, and thus don’t pay for it, there will be a series of consequences. As a society, we’ll be less likely to dissent. We’ll be more likely to stagnate. And, if we feel boxed in by surveillance, we’ll be less likely to have a functioning democracy.